The virus of myside bias is spreading among cognitive elites – Interview with Keith E. Stanovich


Introduction – exploration of rational thought

Keith E. Stanovich is one of the most cited contemporary psychologists. For example, his classic article from the field of developmental psychology on the “Matthew effect in education” has been cited more than 1,500 times in the scientific literature. In recent years, he and his team have focused on human rationality and its special role as a cognitive competence alongside intelligence. In this context, Stanovich coined the term dysrationalia to describe the tendency of individuals to think and act irrationally even though they possess sufficient intelligence. In their most recent book, “The Rationality Quotient: Toward a Test of Rational Thinking”, published in 2016, Stanovich and his colleagues developed the basic concept of a comprehensive test of rational thinking.

Leading experts in psychology are convinced of the validity of this concept, as a statement by Daniel Kahneman of Princeton University, winner of the 2002 Nobel Prize in Economics, shows:

The Rationality Quotient is a significant advance in the psychology of rationality. It presents the best analysis of the cognitive errors in the scientific literature and makes a compelling case for measuring rationality independently of intelligence.

Daniel Kahneman, 2016

Some of Stanovich’s colleagues in psychology even conclude from his work on rationality that irrational thinking can be effectively limited among cognitive elites. Keith E. Stanovich contradicts this conclusion in his new book, which is about to be published (Stanovich, K. E. 2021. The Bias That Divides Us: The Science and Politics of Myside Thinking). In his view, the so-called myside bias often impedes rational thinking and action, especially among political and intellectual elites.

Fortunately, I had the opportunity to study his analyses in detail and to discuss with Keith E. Stanovich, in an interview, their consequences for the management of current global crises.

See for yourself:

Question #1 – motivation

I would like to start with a question that probably plays a big role in the introduction of your new book:

Why, after dealing with numerous different biases, do you dedicate a whole book to myside bias? You and your colleagues in your lab had already studied individual differences in myside bias. Does this bias have a particularly harmful effect?

Keith E. Stanovich #1 – pervasiveness of myside bias

Yes, my group had investigated myside bias since 2000, but my special focus on it began in 2016 after we published The Rationality Quotient. The November 8, 2016 United States presidential election occurred, and the nature of my email suddenly changed. I began to receive many emails that had the implication that I now had the perfect group to study—Trump voters—who were obviously irrational in the eyes of my email correspondents (most of them university faculty in the social sciences).

Subsequent to the election, I also received many invitations to speak. Several of these invitations came with the subtle (or sometimes not-so-subtle) implication that I surely would want to comment—after first giving my technical talk, of course—on the flawed rational thinking of the voters who had done this terrible thing to the nation. One European conference that solicited my participation had as its theme trying to understand the obviously defective thinking not only of Trump voters, but of Brexit voters as well. I—the author of a rational thinking test—was seen as the ideal candidate to give the imprimatur of science to this conclusion.

I wrote an essay explaining to all of these correspondents that I could not give them what they wanted: on any definition of rationality, there was no evidence that Trump and Clinton voters were significantly different.

After writing the essay, it occurred to me that my correspondents perfectly illustrated a finding of ours regarding myside bias: that it was not attenuated by intelligence or education. My correspondents were intelligent and highly educated faculty, but their confidence that the Trump voters (or the Brexit voters) were irrational was a perfect illustration of myside bias. They seemed to think that intelligence leads to ideological “correctness”. Such a view is itself highly irrational.

The pervasiveness of myside bias, even among cognitive elites, was what led me to write my new book.

Question #2 – characteristics and appearance

You are probably referring to your essay “Were Trump Voters Irrational?”, published in September 2017 in Quillette, aren’t you?

I have to admit that when I read the essay some time ago, I was shocked at first when you demonstrated that there is no single “party of science”:

You explain with vivid examples how supporters of both parties differ in which scientific findings they accept and which they ignore because the findings do not fit their moral concepts. What the supporters of all parties have in common, in your opinion, is that they systematically distort whatever does not fit their group identity. My shock made me clearly feel, on the one hand, my own myside bias and, on the other, the omnipresence of this distortion.

So with a view to your new book, the pressing question seems to be: Where does this myside bias regularly occur? – How can it be identified in individuals and in group behavior?

Keith E. Stanovich #2 – omnipresence of myside bias

Yes, you are correct on many counts. I am referring to my 2017 essay in Quillette in which I analyzed voter irrationality claims and found that the literature contained no evidence indicating that the Trump voters would have been any less rational (or less intelligent, or less knowledgeable) than the Clinton voters. In the same essay, I showed that this conclusion is pretty much parallel to the finding that neither political party in the United States is the party of science. They both accept and reject science depending upon whether the conclusion aligns with the political policy that maps their ideological position. Thus, you are completely correct in summarizing the finding: supporters of all ideologies distort their understanding of scientific findings that do not fit their group identity.

And you are right to react to these findings as suggesting the omnipresence of myside bias. That is one of the key things that I outline in the new book. Research has shown that myside bias is displayed in a variety of experimental situations: people evaluate the same virtuous act more favourably if committed by a member of their own group and evaluate a negative act less unfavourably if committed by a member of their own group; they evaluate the identical experiment more favourably if the results support their prior beliefs than if the results contradict their prior beliefs; when searching for information, people select information sources that are likely to support their own position; and even when told to present balanced arguments, people generate more arguments in favor of their own beliefs.

Even the interpretation of a purely numerical display of outcome data is tipped in the direction of the subject’s prior belief. Likewise, judgments of logical validity are skewed by people’s prior beliefs. Valid syllogisms with the conclusion “therefore, marijuana should be legal” are easier for liberals to judge correctly and harder for conservatives; whereas valid syllogisms with the conclusion “therefore, no one has the right to end the life of a fetus” are harder for liberals to judge correctly and easier for conservatives. I will stop here, because I have not even begun to enumerate the many different paradigms that psychologists have used to study myside bias. As I show in my new book, The Bias That Divides Us, myside bias is not only displayed in laboratory paradigms, but it characterizes our thinking in real life as well. In early May 2020, demonstrations took place in several US state capitols to protest against mandated stay-at-home policies, ordered in response to the Covid-19 pandemic. Responses to these demonstrations fell strongly along partisan lines—one partisan side deploring the societal health risks of the demonstrations and the other supporting the demonstrations. Only a few weeks later, these partisan lines on large public gatherings completely reversed polarity when new mass demonstrations occurred for a different set of reasons.

Siegfried Hecker, Director of Los Alamos National Laboratory with Secretary Hazel O’Leary and President William Clinton (Photo rights: History in HD – Unsplash)

Question #3 – real-world outcomes

You have just pointed out that myside bias is by no means a mere laboratory phenomenon, but something real that influences our thinking in everyday life. In your last book, “The Rationality Quotient”, you mentioned this aspect several times. For example, you compiled an extensive list of “real-world outcomes” of biases. How will it be in your new book? How far will you “step out of the lab” and analyze real-world outcomes of myside bias?

Keith E. Stanovich #3 – ambiguous situations spawn myside bias

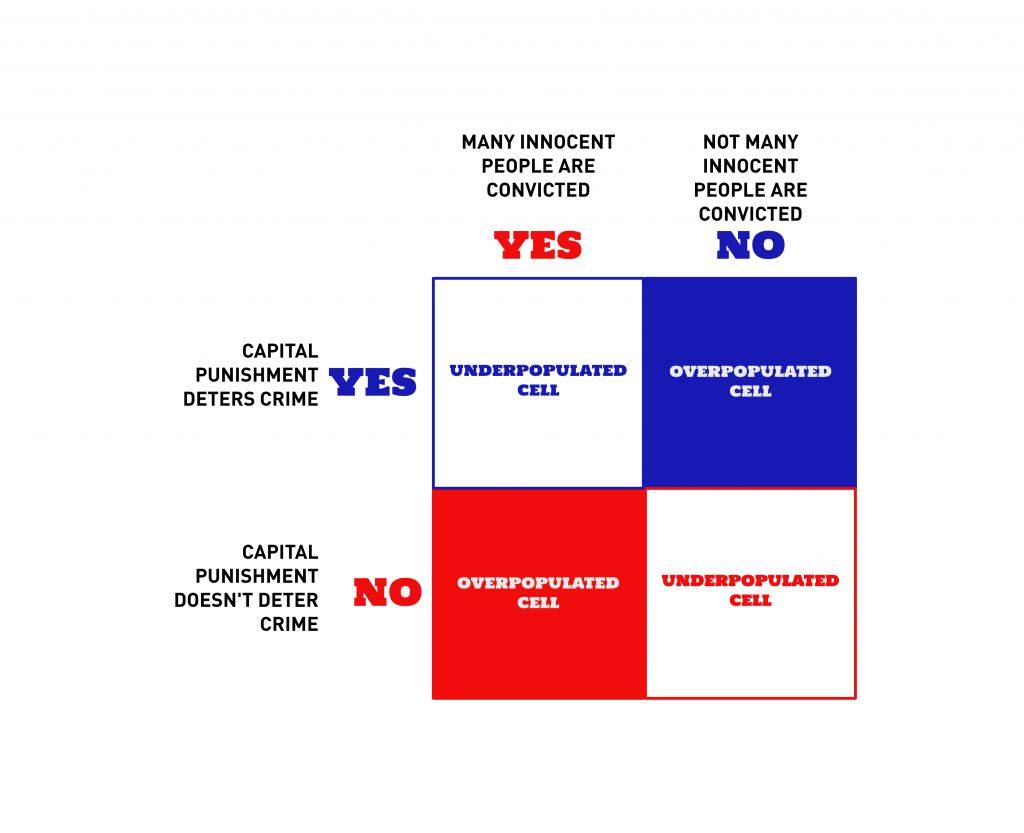

I use examples from a variety of domains, but the most fertile domain is in what we might broadly call politics, government policy, and ideology. I describe, for example, debates about capital punishment in the United States. There are many people who believe that capital punishment deters crime and others that think that it does not. There are people who think that many innocent people are convicted of crimes and other people who think that not many innocent people are convicted of crimes. Note however, that in the 2 x 2 matrix of these beliefs, two of the cells are overpopulated and two are underpopulated. Many people think that capital punishment deters crime and that not many innocent people are convicted of crimes; and many people think that capital punishment fails to deter crimes and that many innocent people are convicted of crimes. However, almost no one has the two other conjunctions of beliefs, both of which are very plausible: that capital punishment deters crime but that many innocent people are convicted of crimes; or that capital punishment fails to deter crime but that very few innocent people are convicted. This suggests that the evaluations were not due to assessing, independently, the evidence on each one. Instead, the evaluation of the two different propositions was linked, via myside bias, to convictions about capital punishment.

I discuss a study by Kopko and colleagues in which they found that rulings on the adequacy of challenged ballots in elections were infected by partisan bias. An additional finding from their study is also of some importance. The researchers examined whether performance changed based on whether the rules for classifying the ballots were specific or ambiguous. They found that the more ambiguous the rules, the more myside bias was observed. Although this may not seem to be a surprising result (it would seem to follow from many different frameworks within psychology, such as signal detection theory), it is an important result nonetheless.

It is important because it is critical to realize that, when it comes to controlling our own myside reasoning, ambiguity is not our friend. It is not encouraging to know that ambiguous situations spawn myside bias. Complexity magnifies ambiguity, and the ever-proliferating internet-fueled universe of information we are experiencing increases the complexity of the communicative world. Too much information maximizes the mysided selection of evidence. The more evidence there is, of all types (good and bad; on this side and on that), the easier it is to select from it with a myside bias. Both the complexity and the increasing amount of social exchange that occurs via the internet (social media, etc.) make verifying what is actually going on in the world much more difficult in the present age.

We certainly have seen this operating in the context of the Covid-19 pandemic. Particularly at the beginning of the pandemic, but even now, there are so many unknowns that it is impossible to say which specific public health policies were correct at what specific time. Across the world, countries tried different strategies and those different strategies were of course crossed (in the sense of experimental design) with all of the various demographic differences between countries (some are older and some are younger) as well as with their geographical differences (some are islands). If we add to these the various governmental differences between countries (some are authoritarian and others are democracies), it was very hard to see what public policy strategies were superior to others in the long term (that is, counting up not only the toll of death rates but also future economic damage which cashes out in health problems as well). It was a situation of great complexity calling for us all to temper our conclusions about various public policy choices. Nevertheless, partisans rushed into this complexity to declare that political entities that were on their side were making the right choices and political entities opposed to their partisan position were making the wrong choices. We saw this very strongly in the United States where of course we had 50 different states with varying policies to make the biased selection that is at the heart of mysided reasoning even easier!

Question #4 – prevention of myside bias

You have just described ambiguity, the obscurity of situations, and the complexity of the facts as a context that favors biased decision-making. How do you analyze this in your book?

Do you argue that smart politicians deliberately use the unclear situation to push through decisions that would otherwise be difficult to legitimize?

Or do decision-makers themselves unconsciously become victims of their own biases?

In the first case, politicians would deliberately exploit the myside bias of their fellow citizens to achieve their goals and their one-sided interests. In the second case, politicians themselves would fall victim to the myside bias – instead of making rational decisions, they would unconsciously “rationalize” decisions in a psychoanalytical sense.

Both models of the appearance of myside bias in the context of important political decisions would be bad:

What, fundamentally, can we do to prevent this kind of bias?

Keith E. Stanovich #4 – six recommendations

The answer is that both of your models of the operation of myside bias in politics are true. Both are taking place. Certainly politicians themselves are victims of their own myside biases, but partisan politics itself encourages politicians to use myside partisanship as a weapon to attain their goals – goals that are sometimes antithetical to the desires of their own party members. Let me contextualize this by first listing some of the sections in the final chapter of my book that deal with what we can do to mitigate the effects of myside bias. In that chapter, I discuss:

1. Avoiding myside bias by realizing that our political opponents disagree with us not because we know some facts that they do not, but because of legitimate value differences.

2. Decreasing our own myside bias by realizing that, even within ourselves, we hold conflicting values.

3. Realizing that exposure to social media is causing an obesity epidemic of the mind.

4. Decreasing the amount of myside bias that we display by taking on board the uncomfortable fact that we actually didn’t think our way to the ideological convictions that drive our myside bias – that our convictions come, thoughtlessly, from our genetic propensities and from our social milieu.

5. Being aware that partisan tribalism is making you more mysided than political issues are. Avoid myside bias by decoupling specific beliefs that you have from the large-scale positions of political entities, such as political parties, that you might identify with. That is, avoid activating the type of convictions that lead to myside bias by resisting the unprincipled bundling of issues done by political parties.

6. Opposing identity politics because it magnifies myside bias.

Recommendations #2 and #5 above are particularly related to your point that “politicians deliberately exploit the myside bias of their fellow citizens to achieve their goals and their one-sided interests”, especially #5.

Myside bias is driven by the convictions that we have, but many of those convictions are driven by partisanship. Some of the mysided behavior generated by partisanship is, in a certain sense, unnecessary. By unnecessary, I mean that we would not have conviction-based beliefs on many issues had we not known the position taken by our partisan group. Hyper-partisanship turns testable prior beliefs that we might have held with modest confidence into protected values that we cling to with the strength of a conviction. Often, we would never have arrived at the conviction by independent thought.

Research has shown that most people are not very ideological. They do not think much about general political principles and they hold positions on particular issues only when the issues tend to affect them personally. Their positions from issue to issue tend to be inconsistent and are not held together by a coherent political worldview that they can consciously articulate. Studies tend to find that only for people who are deeply involved in politics and/or extremely highly educated and immersed continuously in high-level media sources do positions on issues consistently cohere in a way that looks like an ideology.

In fact, both sides in our partisan debates often point out—convincingly—how positions on the other side are aligned in a seemingly incoherent manner, and the tactic is often used quite effectively. In the abortion debate, it is common for pro-choice advocates to point out the inconsistency of the pro-life advocate who wants to preserve the life of the unborn but not the life of someone on death row. This argument is often effective and compelling, when it is delivered. However, so is the converse argument from the pro-life advocate who points out the inconsistency of pro-choice advocates who are against the death penalty. The latter seem to be accepting of the deaths of the unborn (increasingly, in the Democratic Party at least, right up until the point of birth) but not accepting of the deaths of criminals. The pro-choice advocate often counters that innocent people have been executed, but their opponents then point out that all of the unborn are innocent. Both pro-choice and pro-life advocates arguing for an inconsistency in their opponents seem to have compelling points. The normative recommendation here would be that both sides moderate their opinion on at least one of these issues, if not both. Both groups hold inconsistent positions on these two issues because their respective parties have bundled them together (the Democrats have bundled pro-choice with anti-capital punishment and the Republicans have bundled pro-life with pro-capital punishment). These two issues are much, much more independent in principle than one would expect from the tight bundling of the issues by partisan thought leaders.

Some of the bundling in our current politics seems extremely strange. Supporters of animal rights wish to extend the umbrella of moral concern to those less sentient and less biologically complex than humans. It would seem then, that animal rights activists would be pro-life proponents as well. The fetus, being less sentient and biologically complex, would seem to be in the forefront of organisms needing the extended moral umbrella that is at the heart of the animal rights stance. Yet when we look empirically at the distribution of political attitudes, we find that the correlation goes, somewhat shockingly, in the opposite direction: people who support protections for animals that are much less complex and sentient than humans are more likely to support aborting unborn humans. Some vegans, for example, who will not eat honey because of the damage it might do, morally, to the bees, turn out to be vociferous supporters of unrestricted abortion.

We can argue about whether or not the correlation here should be negative, but it is hard to think of one coherent moral principle that links these two attitudes (support for veganism and abortion) in a positive direction. To be clear, I am not saying that any of the positions on these individual issues (animal rights, veganism, abortion) are wrong or irrational. I am only arguing the much weaker point that the clashing juxtaposition of moral judgments (the unexpected correlations between positions) seems more likely to have resulted from the people accepting the partisan bundling of these issues than it does from independent thought about each.

That the bundling of issue positions is done for reasons of political expediency is strongly suggested by the speed and magnitude of changes in the policy positions that can happen within the parties. It took only a few years for the Republicans to go from a party critical of budget deficits when President Obama was in office, to a party very accepting of massive deficits under the Trump administration. It took not much longer for the Democrats to go from being a party that opposed illegal immigration because it depresses the wages of low-skilled workers to being the party of sanctuary cities and the advocate of every undocumented person who crosses the border. These shifts are caused by changes in electoral strategy, not by the application of political principles.

This fact can be used to tamp down our own myside bias. Understand that your political party is functioning like a social identity rather than an abstract ideology, and it is bundling issues to serve its own interests, not yours.

Question #5 – how to solve global problems

First I would like to try to recapitulate your analysis briefly:

From your point of view, we as actors can fight our myside bias

(1) by realizing that in disputes with opponents we are arguing not about facts but about legitimate value differences, and thus ultimately about opposing legitimate interests;

(2) by recognizing that we ourselves are trying to satisfy values and interests that are inconsistent with each other;

(3) by realizing that our power of judgment is limited by an epidemic of influence from social media;

(4) by recognizing that the beliefs that drive our myside bias are shaped by two fundamentals: biological evolution (the genetic preconditions of our thinking) and our social evolution and socialization;

(5) by recognizing that we are exposed to party tribalism, which influences us to adopt value positions and opinions that have been arbitrarily bundled;

(6) by recognizing the one-sidedness of identity politics.

Have I summarized your analysis correctly?

The human species is increasingly threatened by its inability to make rational decisions. As you show, this problem often stems from value orientations, value conflicts, and manipulated value attitudes.

Against this background, how do you see our chances of solving problems such as the current pandemic, climate change, and the increasing environmental disasters it causes? Will people in the coming years and decades learn to deal with conflicts of values? Are there means to strengthen the necessary rational and reflective thinking of individuals and decision-makers in groups – effectively and in time?

Or are we, as the moral psychologist Jonathan Haidt has put it figuratively, “emotional dogs” whose rational reflections play only a minor role, wagging our “rational tail” more or less ineffectively in the face of global problems?

Keith E. Stanovich #5 – problems might not be solved by more knowledge – but by the insight into one’s own convictions

Yes, you have summarized my recommendations in chapter 6 correctly. Jonathan Haidt developed his dual process model, where rational reflections are considerably subordinated to System 1 emotions, in the context of moral reasoning. His model applies quite well to moral reasoning, but rational reflections can play a larger role in containing some of the other biases in the heuristics and biases literature (framing biases, base rate neglect, anchoring biases, vividness biases, etc.). However, the bad news in my book is that myside bias is more like the case of moral reasoning than like other biases in the literature in that it is pretty well described by Haidt’s model.

Myside bias is driven by the strength of convictions on specific issues. Those convictions, however, have as their distal cause innate psychological propensities and social learning throughout a person’s lifetime – neither of which is under much control by the individual. The convictions are memes that the person has, for the most part, not acquired reflectively, but instead acquired because they were ideas in the social milieu that fit temperamental propensities. The only good news here is recommendation #4. If people realize that the convictions that they hold are less consciously chosen than they thought, they may hold those convictions with less intensity. Lessening the intensity of convictions will reduce myside bias.

Finally, regarding your question about the current pandemic and climate change, I would again point to recommendations #2 and #5 as providing the answer here. Both of these issues have become politicized along tribal lines (#5) and that has hurt our ability to approach both problems. Regarding the pandemic in the United States, as I noted above, in early May 2020, demonstrations took place in several US state capitols to protest against mandated stay-at-home policies. Most of the mainstream media condemned those demonstrations as a threat to public health. However, when new demonstrations on a totally different political topic occurred within days of the first set, the mainstream media praised the new demonstrations even though they were an equal threat to public health. This had the effect of decreasing the public’s trust and confidence in the mainstream media. The myside bias displayed has hurt our ability to transmit information to the public that will be trusted. And of course our governments and politicians do the same thing – inconsistently condemning and condoning equivalent actions based on the target group engaging in the actions.

The ultimate answer is to keep something like responses to the pandemic from becoming politicized in the first place. Somewhat the same story holds in the case of climate change. In the United States, when Al Gore began to propagate the issue of climate change to a much larger public, I remember that I thought that this was such a good thing because now the general public would become more informed. But over the years I became horrified as I saw that the fact that Al Gore had become the “front man” for climate change concern had become a negative thing. The fact that he was a well-known politician led to the issue becoming politicized along tribal/partisan lines. I now realize that it would have been much better to have informed the public more slowly through spokespersons who were more neutral politically, even if that would have slowed the dissemination of information about climate change. The slower dissemination would have been more than made up for if we could have disseminated the information in a way that did not invite partisanship.

And finally, I would recommend invoking recommendation #2 above. Avoid engaging in what political scientist Arthur Lupia terms the error of transforming value differences into ignorance—that is, mistaking a dispute about legitimate differences in the weighting of the values relevant to an issue for one in which your opponent “just doesn’t know the facts.”

Climate change provides an example. Pollution reduction and curbing global warming often require measures which have as a side effect restrained economic growth. The taxes and regulatory restraints necessary to markedly reduce pollution and global warming often fall disproportionately on the poor. For example, raising the cost of operating an automobile through the use of congestion zones, raised parking costs, and increased vehicle and gas taxes restrains the driving of poorer people more than that of the affluent. Likewise, there is no way to minimize global warming and maximize economic output (and hence, current jobs and prosperity) at the same time. People differ on where they put their “parameter settings” for trading off environmental protection for the future versus current economic growth. Differing parameter settings on issues such as this are not necessarily due to lack of knowledge. They are the result of differing values, or differing worldviews.

It is not surprising that the differing values that people hold may result in a societal compromise that pleases neither of the groups on the extremes. It is the height of myside bias to think that if everyone were more intelligent, or more rational, or wiser, then they would put the societal settings exactly where you put your own settings now. There is in fact empirical evidence showing that more knowledge or intelligence or reflectiveness does not resolve zero-sum value disagreements such as these.

When liberals see conservatives opposing green initiatives, they accuse conservatives of not understanding the implications of global warming for the future. When conservatives see liberals supporting expensive green initiatives, they accuse liberals of not understanding the facts about how declines in economic growth translate into more poverty and economic hardship for the most vulnerable members of our society. In both cases, these are mistaken characterizations. Most conservatives do care about the state of the environment and about the implications of global warming. Most liberals do understand that economic growth prevents hardship and reduces poverty. Often, both groups do know the facts—they just give different weightings to the value trade-off in play: concern about the effects of future global warming and the maintenance of maximum economic growth. The more we realize that, for any given issue, there are value trade-offs to consider, the less mysided we will be.

The many correspondents upset with the 2016 election results who contacted me after the publication of my book on rational thinking that year are members of a worldwide cognitive elite. Those correspondents thought (wrongly, as I demonstrate in my book) that any study of human reasoning would provide fodder for their view that the deciding voters in both Great Britain (Brexit) and the United States (the presidential election) in that year were irrational. My correspondents seemed to think that political disputes are entirely issues of rationality or knowledge acquisition—and that superior general (or specific) knowledge automatically confers correctness in the political domain. The thinking seemed to have been that “well, as an academic, I am a specialist in rationality and knowledge, and therefore that expertise confers on me special wisdom in the domain of politics.” In short, my correspondents seemed to be making the error Arthur Lupia talks about: accusing your opponents of “not knowing the facts” just because you want your worldview to prevail.

As cognitive elites, we can tame our myside bias by realizing that, in many cases, our thinking that certain facts (that, due to cherry-picking, we just happen to know) are shockingly unknown by our political opponents is really just a self-serving argument about knowledge that is used to mask the fact that the issue we are talking about is a value conflict. Our focus on the ignorance of our opponents is simply a ruse to cover up our conviction that our own value weightings should prevail—not that the issue is one that will be resolved by more knowledge.

#6 – Conclusion

Mr Stanovich – thank you for your patience and detailed clarification!

Links

Keith E. Stanovich (September 28, 2017): “Were Trump Voters Irrational?” – Quillette


Further readings

Rational Thinking

Stanovich, K. E. (2004). The robot’s rebellion: Finding meaning in the age of Darwin. Chicago: University of Chicago Press.

Stanovich, K. E. (2009). What intelligence tests miss: The psychology of rational thought. New Haven, CT: Yale University Press.

Stanovich, K. E. (2010). Decision making and rationality in the modern world. New York: Oxford University Press.

Stanovich, K. E. (2011). Rationality and the reflective mind. New York: Oxford University Press.

Stanovich, K. E. (2021). The Bias That Divides Us: The Science and Politics of Myside Thinking. Cambridge, Massachusetts/London, England: The MIT Press.

Stanovich, K. E., West, R. F., & Toplak, M. E. (2016). The Rationality Quotient: Toward a Test of Rational Thinking. Cambridge, Massachusetts/London, England: The MIT Press.

Moral Psychology

Greene, J. (2013). Moral Tribes: Emotion, reason, and the gap between us and them. New York: Penguin Press.

Haidt, J. (2001). The emotional dog and its rational tail: A social intuitionist approach to moral judgment. Psychological Review 108: 814-834.

Haidt, J. (2012). The righteous mind: Why good people are divided by politics and religion. New York: Pantheon.